Startup update 20: LLM Zombies

2025-09-24

Tagged: cartesian tutor, llms

Progress update

This week, I worked on building a “Review problem with AI” function that would import any question on my website into an AI for an AI-guided interactive solve experience. I originally thought that this would be just a convenience feature, so that students wouldn’t have to go figure out how to copy-paste the problem (and all associated images) into ChatGPT if they wanted a solution.

Then, I tried using it, and found that Claude Sonnet 4 would simply get the answer wrong about 5% of the time, and would generate bullshit explanations about 30% of the time. These are relatively subtle mistakes, too - many chemistry majors and even PhDs would likely make similar mistakes! I tried GPT-5 Pro and Opus 4.1, but neither was really satisfactory. The current best recourse is to manually review each AI-generated solution, at great expense of time. I’ll continue exploring because it is not sustainable for me to spend ~4 hours reviewing/correcting solutions for each exam. (It’s not easy work, either - those 4 hours sap my mental energy enough to make the rest of my day zero-productivity.)

Props to the USNCO problem authors for so reliably coming up with LLM-fooling problems.

On the plus side, all this labor so far has generated a plethora of “look how LLMs screw this up” fodder for a series of blog posts / marketing materials that might drive more students to my site. TBD whether I have to learn how to use TikTok or whether I’ll find a different platform to market on.

It is slightly surreal to me that I am seeing this rate of bullshit in cutting-edge foundation models at the same time that these companies are raking in gold medals at the IMO/IOI. There is definitely a disconnect here. Maybe what’s going on is that the IMO/IOI models are massively parallelized, with thousands of extended reasoning attempts, and tiered models consuming each other’s output to try and distill and adjudicate between multiple attempts of unknown correctness into a final solution. It cannot possibly be the same models available to mere mortals?

Anyway, if you work at a major AI training company and want to buy some high-quality solution tokens, let me know at brian@cartesiantutor.com. See my new Cartesian Tutor blog for an example of what these solution tokens might look like.

LLM Zombies

If I had to broadly describe the errors I’m seeing, I would simply say: LLMs are pattern-matching zombies, without any internal world model.

- ~50% of errors were due to misreading images and diagrams - in line with my observations at LLMs are Blind.

- LLMs misidentify glassware types (e.g. they mix up burette, condenser, and addition funnel, which all look like upright cylinders); I could see them not making this mistake next year.

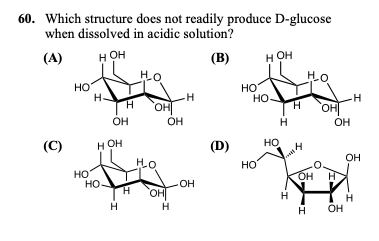

- LLMs jump to conclusions about what they’re seeing; in this problem, they make assorted mistakes, like assuming the furanose form of glucose in option (D) is fructose, or somehow thinking they’re looking at a disaccharide. This is probably related to the contextless image encoding architecture.

- LLMs also fail to “see” 2D renderings of molecules in 3D space, which is a problem I don’t expect to be solved in foundation models for at least 5-10 years.

- When I retried some of these problems with GPT-5, Claude Opus 4.1, and Gemini 2.5 Pro, they struggled with many of them. For example: when asked whether a reaction had a high entropy change, Opus 4.1 would claim that \(\ce{C60 (buckyball) -> 60C (graphite)}\) generated 59 net particles, and therefore it was high entropy. However, each graphene sheet in graphite binds together far more than 60 carbon atoms, on average, so the net number of particles would go down. GPT-5 and Gemini 2.5 Pro did not make this particular mistake but made others.

- I also saw mistakes where an LLM would claim that a factor was negligible because it was so small. That might be true if it was simplifying an addition \(x + \epsilon \approx x\), but here, it was actually simplifying a multiplication \(x\epsilon \approx x\).
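To make the addition-versus-multiplication point concrete, here is a minimal Python sketch. The values of `x` and `eps` are made up for illustration and do not come from any exam problem; `relative_error` is a helper I'm defining here, not a library function.

```python
def relative_error(exact: float, approx: float) -> float:
    """Return |approx - exact| / |exact|."""
    return abs(approx - exact) / abs(exact)


x = 2.0      # illustrative value (assumption, not from a real problem)
eps = 1e-6   # a "small" factor (assumption)

# Additive case: approximating x + eps by x is off by a tiny relative amount.
add_err = relative_error(exact=x + eps, approx=x)

# Multiplicative case: approximating x * eps by x is off by roughly 1/eps.
mul_err = relative_error(exact=x * eps, approx=x)

print(f"additive relative error:       {add_err:.2e}")
print(f"multiplicative relative error: {mul_err:.2e}")
```

Dropping a small term is only safe when it is small relative to what it is being added to. A small multiplicative factor is the whole answer, not a correction to it.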

Many of these issues are just… really, completely inexcusable mistakes, from a human point of view. Well, at least there’s a business for me to build.